Physical Address

304 North Cardinal St.

Dorchester Center, MA 02124

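AI researchers from OpenAI, Google DeepMind, Anthropic, and a broad coalition of companies and nonprofit groups are calling for deeper investigation into techniques for monitoring the so-called thoughts of AI reasoning models, in a position paper published Tuesday.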

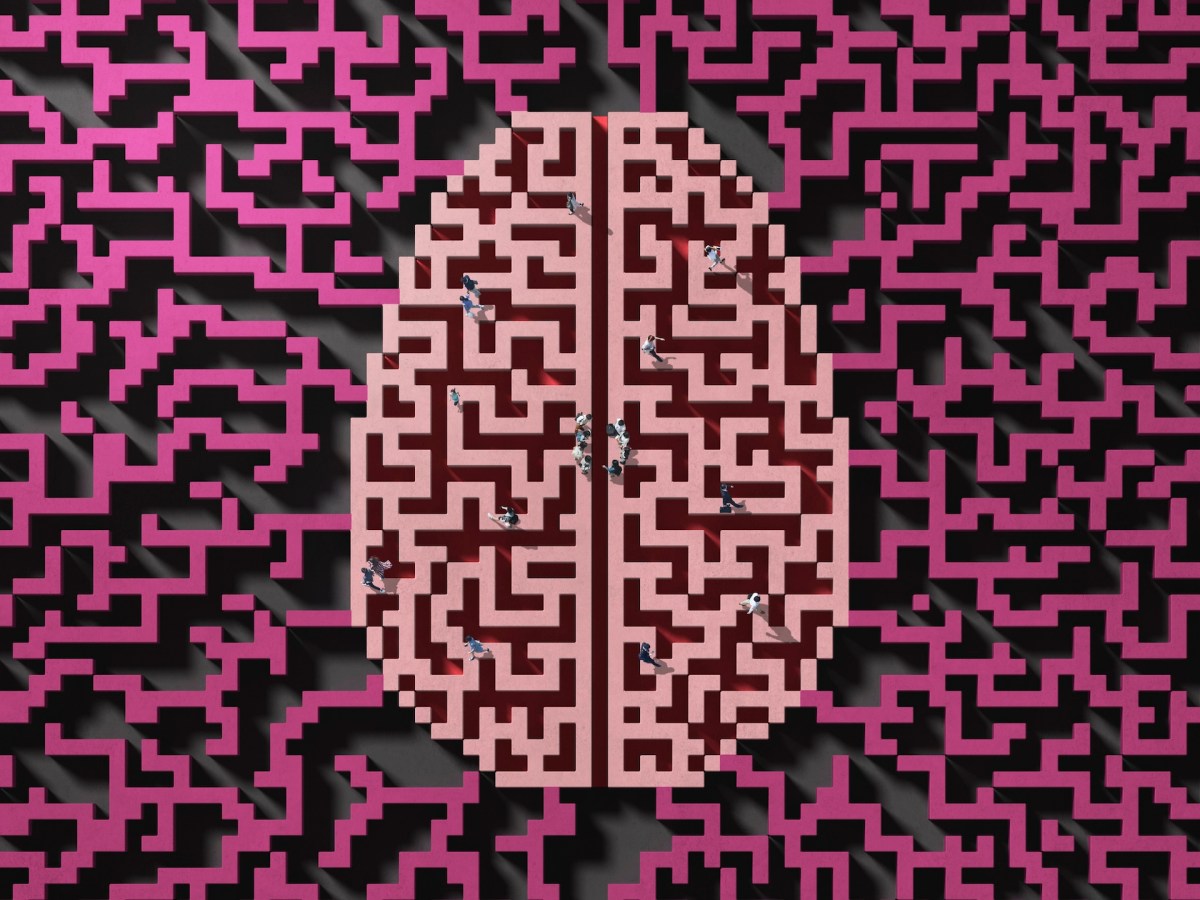

A key feature of AI reasoning models, such as OpenAI's o3 and DeepSeek's R1, is their chains of thought, or CoTs: an externalized process in which AI models work through problems, much as humans use a scratch pad to work through a difficult math question. Reasoning models are a core technology for powering AI agents, and the paper's authors argue that CoT monitoring could be a core method for keeping AI agents under control as they become more widespread and capable.

"CoT monitoring presents a valuable addition to safety measures for frontier AI, offering a rare glimpse into how AI agents make decisions," the researchers wrote in the position paper. "Yet, there is no guarantee that the current degree of visibility will persist. We encourage the research community and frontier AI developers to make the best use of CoT monitorability and study how it can be preserved."

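The position paper asks leading AI model developers to study what makes CoTs "monitorable," in other words, which factors increase or decrease transparency into how AI models actually arrive at their answers. The authors say CoT monitoring may be a key method for understanding AI reasoning models but note that it could be fragile, and they caution against any interventions that might reduce its transparency or reliability.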

The paper's authors also call on AI model developers to track CoT monitorability and to study how the method could one day be implemented as a safety measure.

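Notable signatories include Safe Superintelligence CEO Ilya Sutskever and Nobel laureate Geoffrey Hinton. The first authors include leaders from the U.K. AI Security Institute and Apollo Research, and other signatories come from METR, Amazon, Meta, and UC Berkeley.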

The paper marks a moment of unity among many of the AI industry's leaders in an attempt to boost research on AI safety. It comes at a time when tech companies are caught in fierce competition, which has led Meta to poach top researchers from OpenAI, Google DeepMind, and Anthropic with million-dollar offers. Some of the most sought-after researchers are those building AI agents and AI reasoning models.

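"We're at this critical time where we have this new chain-of-thought thing. It seems pretty useful, but it could go away in a few years if people don't really concentrate on it," said Bowen Baker, an OpenAI researcher who worked on the paper. "Publishing a position paper like this, to me, is a mechanism to get more research and attention on this topic before that happens."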

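OpenAI publicly released a preview of its first AI reasoning model, o1, in September 2024. In the months since, the tech industry has been quick to release competitors with similar capabilities, and some models, including ones from Google DeepMind, have shown even stronger performance on benchmarks.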

However, relatively little is understood about how AI reasoning models actually work. While AI labs have excelled at improving AI performance over the last year, that has not necessarily translated into a better understanding of how the models arrive at their answers.

Anthropic has been one of the industry's leaders in figuring out how AI models really work, a field called interpretability. Earlier this year, CEO Dario Amodei announced a commitment to crack open the black box of AI models by 2027 and to invest more in interpretability. He also called on OpenAI and Google DeepMind to research the topic more.

Early research from Anthropic has indicated that CoTs may not be a fully reliable indication of how models arrive at their answers. At the same time, OpenAI researchers have said that CoT monitoring could one day be a reliable way to track alignment and safety in AI models.

The goal of position papers like this one is to signal-boost and attract more attention to nascent areas of research, such as CoT monitoring. Companies like OpenAI, Google DeepMind, and Anthropic are already researching these topics, but the paper may encourage more funding and research in the space.