Physical Address

304 North Cardinal St.

Dorchester Center, MA 02124

Stanford University researchers paid 1,052 people $60 to read the first two lines of The Great Gatsby to an app. That done, an AI that looked like a 2D sprite from an SNES-era Final Fantasy game asked the participants to tell the story of their lives. The scientists took those interviews and crafted them into an AI that they say replicates the participants’ behavior with 85% accuracy.

The study, titled “Generative Agent Simulations of 1,000 People,” is a joint venture between Stanford and scientists working for Google’s DeepMind AI research lab. The pitch is that creating AI agents based on random people could help politicians and companies better understand the public. Why use focus groups or survey the public when you can talk to people once, spin up an LLM based on that conversation, and then have their thoughts and opinions forever? Or at least as close an approximation of those thoughts and feelings as an LLM is able to recreate.

“This work provides a foundation for new tools that can help investigate individual and collective behavior,” said the paper’s abstract.

“How might, for example, a different set of individuals respond to new policies and public health messages, react to product launches, or respond to major shocks?” the paper continued. “When simulated individuals are combined into collectives, these simulations could help pilot interventions, develop complex theories that capture nuanced causal and contextual interactions, and expand our understanding of structures such as institutions and networks in domains such as economics, sociology, organization and political science.”

All of those possibilities rest on a two-hour interview fed into an LLM that then answered questions mostly like its real-life counterpart.

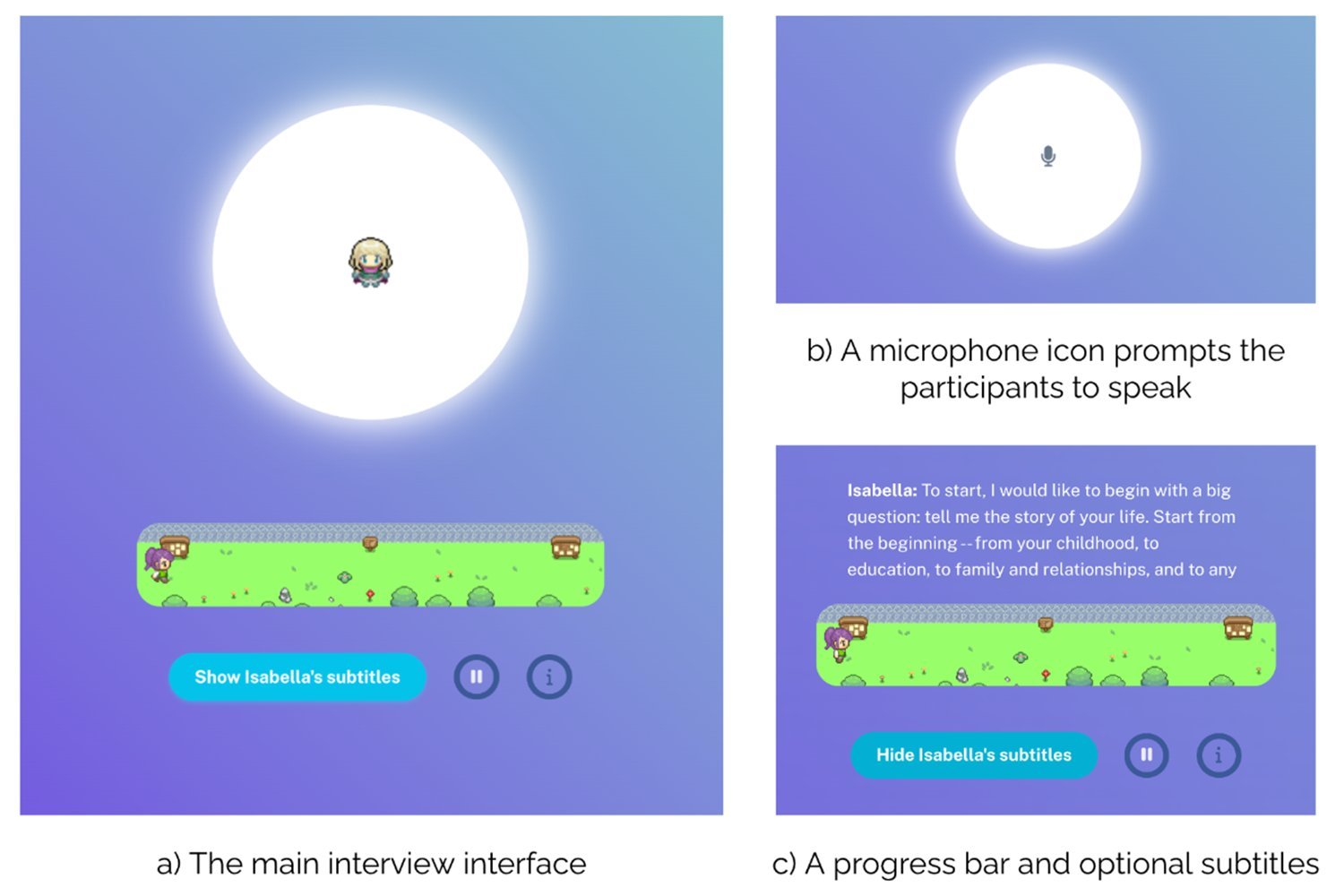

Most of the process was automated. The researchers contracted Bovitz, a market research firm, to gather the participants. The goal was to get a broad sample of the US population, or as broad as possible when limited to 1,000 people. To complete the study, participants signed up for an account in a purpose-built interface, made a 2D sprite avatar, and started talking to an AI interviewer.

The interview questions and style are a modified version of those used by the American Voices Project, a joint project of Stanford and Princeton Universities that interviews people across the country.

Each interview began with the participant reading the first two lines of The Great Gatsby (“In my younger and more vulnerable years my father gave me some advice that I’ve been turning over in my mind ever since. ‘Whenever you feel like criticizing any one,’ he told me, ‘just remember that all the people in this world haven’t had the advantages that you’ve had.’”) as a way to calibrate the audio.

According to the paper, “The interview interface displayed the 2-D sprite avatar representing the interviewer agent in the center, with the participant’s avatar shown in the background, walking toward a position of purpose to indicate progress. When the AI interviewer agent spoke, it was signaled by a pulsating animation of the center circle with the interviewer’s avatar.”

The two-hour interviews produced transcripts that averaged 6,491 words in length. The AI asked questions about race, gender, politics, income, social media use, the stress of participants’ jobs, and the makeup of their families. The researchers published the interview script and the questions the AI asked.

Those transcripts, fewer than 10,000 words each, were then fed into another LLM that the researchers used to spin up generative agents meant to replicate the participants. The researchers then put both the participants and their AI clones through more questions and economic games to see how they compared. “When an agent is interviewed, the entire interview transcript is injected into the model prompt, instructing the model to imitate the person based on their interview data,” the paper said.
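To make that quoted step concrete, here is a minimal Python sketch of what injecting an entire transcript into a prompt could look like. It is an illustration only, not the researchers' code: the function name `build_agent_prompt`, the delimiter lines, and the instruction wording are all assumptions, and no real model API is called.

```python
def build_agent_prompt(transcript: str, question: str, options: list[str]) -> str:
    """Assemble one prompt that carries the full interview transcript.

    Hypothetical helper: the wording and layout are assumptions made for
    illustration, not taken from the paper.
    """
    # Number the answer options so the model can reply with a single choice.
    numbered = "\n".join(f"{i}. {opt}" for i, opt in enumerate(options, start=1))
    return (
        "Below is the transcript of an interview with a study participant.\n"
        "Answer the question the way this person would.\n\n"
        "--- INTERVIEW TRANSCRIPT ---\n"
        f"{transcript.strip()}\n"
        "--- END TRANSCRIPT ---\n\n"
        f"Question: {question}\n"
        f"Options:\n{numbered}\n"
        "Reply with the number of one option."
    )


if __name__ == "__main__":
    prompt = build_agent_prompt(
        transcript="I grew up in Ohio and worked nights for ten years.",
        question="How much do you trust the news?",
        options=["A great deal", "Only some", "Hardly at all"],
    )
    print(prompt)
```

The point of the sketch is how little machinery is involved: the whole transcript sits in the prompt, and the question follows it.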

This part of the process was as close to controlled as possible. The researchers used the General Social Survey (GSS) and the Big Five Personality Inventory (BFI) to test how well the LLMs matched their inspiration. They then ran participants and LLMs through five economic games to see how they compared.

The results were mixed. The AI agents answered about 85% of questions the same way as their real-world counterparts on the GSS. They hit 80% on the BFI. The numbers fell when the agents started playing economic games, however. The researchers offered real-life participants cash prizes for playing games like the Prisoner’s Dilemma and the Dictator Game.
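A figure like "85% of questions answered the same way" can be read as a simple agreement rate. The Python sketch below shows that reading, assuming each answer is a plain label and that the score is just the share of exact matches. The function name `agreement_rate` and the sample answers are invented for illustration, and the paper's own scoring may include adjustments not described here.

```python
def agreement_rate(human_answers: list[str], agent_answers: list[str]) -> float:
    """Return the fraction of questions where the agent matched the human.

    Assumption: answers are compared by exact equality, question by question.
    """
    if len(human_answers) != len(agent_answers):
        raise ValueError("both answer lists must cover the same questions")
    if not human_answers:
        raise ValueError("need at least one question to score")
    matches = sum(h == a for h, a in zip(human_answers, agent_answers))
    return matches / len(human_answers)


if __name__ == "__main__":
    # Made-up answers to four survey questions, for illustration only.
    human = ["agree", "disagree", "agree", "neutral"]
    agent = ["agree", "disagree", "neutral", "neutral"]
    print(agreement_rate(human, agent))  # 3 of 4 match -> 0.75
```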

In the Prisoner’s Dilemma, participants can choose to work together so that both succeed, or betray their partner for a chance to win big. In the Dictator Game, participants have to choose how to allocate resources to other participants. Real-life subjects could earn money on top of the original $60 for playing them.
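For readers who have not met these games, the Python sketch below shows their basic shape. The payoff numbers are conventional textbook values chosen for illustration, not the stakes used in the study, and the names `PAYOFFS`, `play_prisoners_dilemma`, and `dictator_split` are hypothetical.

```python
# Illustrative payoffs (player A, player B). Assumed values, not the study's.
PAYOFFS = {
    ("cooperate", "cooperate"): (3, 3),  # both work together and both do well
    ("cooperate", "defect"): (0, 5),     # A is betrayed, B wins big
    ("defect", "cooperate"): (5, 0),     # A wins big, B is betrayed
    ("defect", "defect"): (1, 1),        # mutual betrayal, both do poorly
}


def play_prisoners_dilemma(choice_a: str, choice_b: str) -> tuple[int, int]:
    """Look up the payoffs for one round of the Prisoner's Dilemma."""
    return PAYOFFS[(choice_a, choice_b)]


def dictator_split(endowment: int, amount_given: int) -> tuple[int, int]:
    """Dictator Game: one player decides how much of a pot to hand over.

    Returns (amount kept, amount given).
    """
    if not 0 <= amount_given <= endowment:
        raise ValueError("amount given must be between 0 and the endowment")
    return endowment - amount_given, amount_given


if __name__ == "__main__":
    print(play_prisoners_dilemma("cooperate", "defect"))  # (0, 5)
    print(dictator_split(10, 3))                          # (7, 3)
```

Comparing a person with their AI clone then comes down to checking whether the two make the same choices in rounds like these.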

Faced with these economic games, the AI clones didn’t replicate their real-world counterparts as well. “On average, the generative agents achieved a normalized correlation of 0.66,” the paper said, or about 66%.

The entire paper is worth reading if you’re interested in how academics think about AI agents and the public. It didn’t take long for the researchers to boil a human being’s personality down into an LLM that behaved in a similar way. Given time and energy, they could probably bring the two closer together.

This worries me. Not because I don’t want to see the ineffable human spirit reduced to a spreadsheet, but because I know that this type of technology will be used for ill. We’ve already seen dumber LLMs trained on public records trick grandmothers into giving bank information to an AI relative after a quick phone call. What happens when those machines have a script? What happens when they have access to custom-built personas based on social media activity and other publicly available information?

What happens when a corporation or a politician decides that the public wants and needs something based not on their spoken will, but on an approximation of it?