Physical Address

304 North Cardinal St.

Dorchester Center, MA 02124

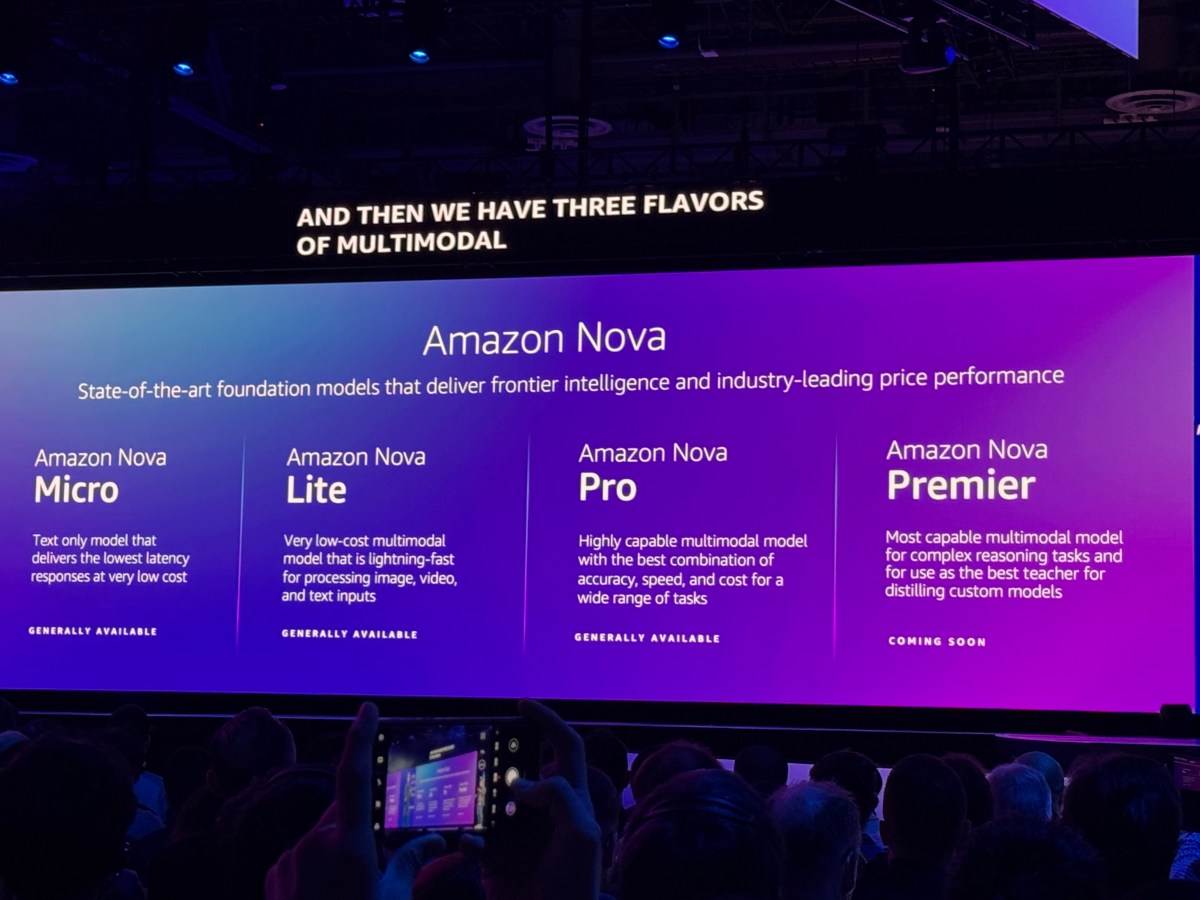

Amazon on Wednesday released what the company claims is the most capable AI model in its Nova family, Nova Premier.

Nova Premier, which can process text, images, and video (but not audio), is available in Amazon Bedrock, the company's AI model development platform. Amazon says Premier is suited to tasks that demand a deep understanding of context across multiple tools and data sources.

Amazon announced its Nova lineup of models in December at its annual AWS re:Invent conference. Over the last few months, the company has expanded the collection with image- and video-generating models as well as audio understanding and agentic, task-performing releases.

Nova Premier, which has a context length of 1 million tokens, meaning it can analyze a large amount of text at once, falls short on certain benchmarks compared with flagship models from rivals such as Google. On SWE-Bench, a coding test, Premier is behind Google's Gemini 2.5 Pro, and it also performs poorly on benchmarks measuring math and science knowledge, GPQA Diamond and AIME 2025.

However, in bright spots for Premier, the model does well on tests of knowledge retrieval and visual understanding, according to Amazon's internal benchmarking.

In Bedrock, Premier is priced at $2.50 per 1 million tokens fed into the model and $12.50 per 1 million tokens generated by the model. That's around the same price as Gemini 2.5 Pro, which costs $2.50 per million input tokens and $15 per million output tokens.
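To make the price comparison concrete, here is a minimal sketch that applies the per-million-token rates quoted above to a single request. The function name `request_cost` and the workload size (200,000 input tokens, 10,000 output tokens) are hypothetical, chosen only for illustration; actual bills may differ depending on pricing tiers and usage.

```python
# Per-million-token prices in USD, as quoted in the article.
PRICES = {
    "Nova Premier": {"input": 2.50, "output": 12.50},
    "Gemini 2.5 Pro": {"input": 2.50, "output": 15.00},
}


def request_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the USD cost of one request at the quoted per-million rates."""
    rates = PRICES[model]
    total = input_tokens * rates["input"] + output_tokens * rates["output"]
    return total / 1_000_000


# Hypothetical workload: a long prompt with a short answer.
for model in PRICES:
    print(f"{model}: ${request_cost(model, 200_000, 10_000):.3f}")
```

At these rates the input side costs the same for both models, so any difference comes from the output tokens alone.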

Importantly, Premier isn't a “reasoning” model. Unlike models such as OpenAI's o4-mini and DeepSeek's R1, it can't take additional time and computing to carefully consider and check its answers to questions.

Amazon is pitching Premier as best for “teaching” smaller models via distillation: in other words, transferring its capabilities for a specific use case into a more efficient package.

Amazon sees AI as increasingly core to its overall growth strategy. CEO Andy Jassy has said that the company is building more than 1,000 AI applications and that its AI business is growing.