Physical Address

304 North Cardinal St.

Dorchester Center, MA 02124

The original version of this story appeared in Quanta Magazine.

Large language models work well because they’re so large. The latest models from OpenAI, Meta, and DeepSeek use hundreds of billions of “parameters,” the adjustable knobs that determine connections among data and get tweaked during the training process. With more parameters, the models are better able to identify patterns and connections, which in turn makes them more powerful and accurate.

But this power comes at a cost. Training a model with hundreds of billions of parameters takes huge computational resources. To train its Gemini 1.0 Ultra model, for example, Google reportedly spent $191 million. Large language models (LLMs) also require considerable computational power each time they answer a request, which makes them notorious energy hogs. A single query to ChatGPT consumes about 10 times as much energy as a single Google search, according to the Electric Power Research Institute.

In response, some researchers are now thinking small. IBM, Google, Microsoft, and OpenAI have all recently released small language models (SLMs) that use a few billion parameters.

Small models are not used as general-purpose tools like their larger cousins. But they can excel on specific, more narrowly defined tasks, such as summarizing conversations, answering patients’ questions as a chatbot, and gathering data in smart devices. “For a lot of tasks, an 8 billion-parameter model is actually pretty good,” said Zico Kolter, a computer scientist at Carnegie Mellon University. They can also run on a laptop or cell phone, instead of a huge data center. (There’s no consensus on the exact definition of “small,” but the new models all max out around 10 billion parameters.)

To optimize the training process for these small models, researchers use a few tricks. Large models often scrape raw training data from the internet, and this data can be disorganized, messy, and hard to process. But these large models can then generate a high-quality data set that can be used to train a small model. The approach, called knowledge distillation, gets the larger model to effectively pass on its training, like a teacher giving lessons to a student. “The reason [SLMs] get so good with such small models and such little data is that they use high-quality data instead of the messy stuff,” Kolter said.
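To make the teacher-and-student idea concrete, here is a minimal sketch of one common way distillation is scored. It is an assumption for illustration, not the specific recipe used by any of the labs named above: the student is penalized when its output probabilities differ from the teacher's "softened" probabilities. The function names, the temperature value, and the toy logits are all hypothetical.

```python
import math


def softmax(logits, temperature=1.0):
    """Turn raw scores into probabilities; a higher temperature flattens them."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL divergence from the teacher's softened distribution to the student's.

    The loss is zero when the student reproduces the teacher exactly and
    grows as the two distributions drift apart.
    """
    teacher_probs = softmax(teacher_logits, temperature)
    student_probs = softmax(student_logits, temperature)
    return sum(
        p * math.log(p / q)
        for p, q in zip(teacher_probs, student_probs)
        if p > 0
    )


if __name__ == "__main__":
    teacher = [4.0, 1.0, 0.5]       # hypothetical teacher scores for 3 tokens
    good_student = [3.8, 1.1, 0.4]  # close to the teacher
    poor_student = [0.5, 1.0, 4.0]  # ranks the tokens in the opposite order
    print(distillation_loss(teacher, good_student))
    print(distillation_loss(teacher, poor_student))
```

In a real training run this quantity would be minimized over many examples with a gradient-based optimizer; the sketch only shows what is being measured.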

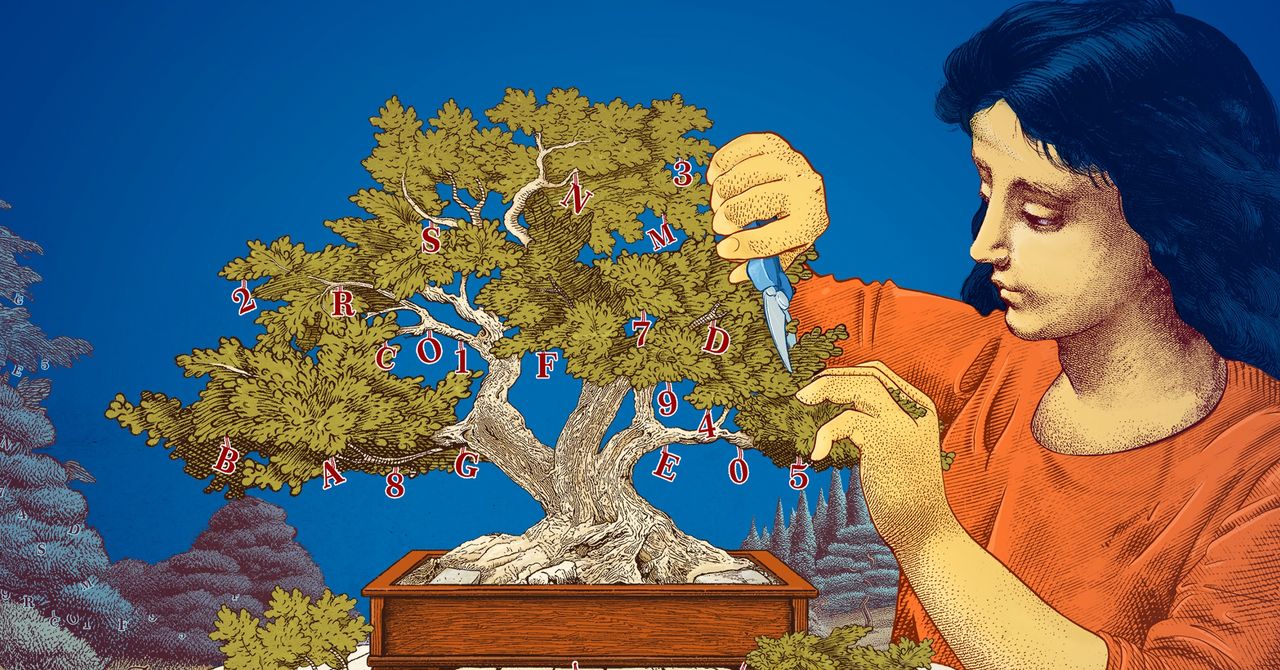

Researchers have also explored ways to create small models by starting with large ones and trimming them down. One method, known as pruning, entails removing unnecessary or inefficient parts of a neural network, the sprawling web of connected data points that underlies a large model.

Pruning was inspired by a real-life neural network, the human brain, which gains efficiency by snipping connections between synapses as a person ages. Today’s pruning approaches trace back to a 1989 paper in which the computer scientist Yann LeCun argued that up to 90 percent of the parameters in a trained neural network could be removed without sacrificing efficiency. He called the method “optimal brain damage.” Pruning can help researchers fine-tune a small language model for a particular task or environment.
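As a rough illustration of what removing parameters means in practice, the sketch below uses magnitude pruning, a simpler stand-in chosen for brevity: it zeroes out the weights with the smallest absolute values. This is not the "optimal brain damage" method itself, which ranks parameters using second-derivative information about the training loss. The function name and the example weights are hypothetical.

```python
def magnitude_prune(weights, fraction):
    """Return a copy of `weights` with the smallest-magnitude share set to 0.0.

    `fraction` is the share of weights to remove, between 0.0 and 1.0.
    """
    if not 0.0 <= fraction <= 1.0:
        raise ValueError("fraction must be between 0.0 and 1.0")
    n_prune = int(len(weights) * fraction)
    # Rank positions from the weakest connection to the strongest.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    to_zero = set(order[:n_prune])
    return [0.0 if i in to_zero else w for i, w in enumerate(weights)]


if __name__ == "__main__":
    # Hypothetical weights for ten connections in a tiny network.
    weights = [0.9, -0.05, 0.3, 0.01, -0.7, 0.02, 0.6, -0.03, 0.08, 0.004]
    print(magnitude_prune(weights, 0.5))
```

In practice a pruned network is usually retrained briefly afterward so the remaining weights can compensate for the ones that were removed.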

For researchers interested in how language models do the things they do, smaller models offer an inexpensive way to test novel ideas. And because they have fewer parameters than large models, their reasoning might be more transparent. “If you want to make a new model, you need to try things,” said Leshem Choshen, a research scientist at the MIT-IBM Watson AI Lab. “Small models allow researchers to experiment with lower stakes.”

The big, expensive models, with their ever-increasing parameters, will remain useful for applications like generalized chatbots and drug discovery. But for many users, a small, targeted model will work just as well, while being easier for researchers to train and build. “These efficient models can save money, time, and compute,” Choshen said.

Original story reprinted with permission from Quanta Magazine, an editorially independent publication of the Simons Foundation whose mission is to enhance public understanding of science by covering research developments and trends in mathematics and the physical and life sciences.